Lecture 22: Parsing Review, Probabilistic CFG

Objectives: Understand parsing ambiguity and probabilistic parsingReference: Ch.8 Analyzing Sentence Structure ( 8.1 Some Grammatical Dilemmas: Ubiquitous Ambiguity, Weighted Grammar)

Parser Review

Let's review parsing results from Homework 8. You likely have realized how Chart Parser is adept at capturing *every possible* tree structure for a given sentence, even the ones that do not make much sense from a human perspective. Following are the parse trees for s6 "the big bully punched the tiny nerdy kid after school":(S

(NP (DET the) (ADJ big) (N bully))

(VP

(VP (V punched) (NP (DET the) (ADJ tiny) (ADJ nerdy) (N kid)))

(PP (P after) (NP (N school)))))

(S

(NP (DET the) (ADJ big) (N bully))

(VP

(V punched)

(NP (DET the) (ADJ tiny) (ADJ nerdy) (N kid))

(PP (P after) (NP (N school)))))

(S

(NP (DET the) (ADJ big) (N bully))

(VP

(V punched)

(NP

(NP (DET the) (ADJ tiny) (ADJ nerdy) (N kid))

(PP (P after) (NP (N school))))))

Also, discuss the following parsing result, for s10 "Homer and his friends from work drank and sang in the bar".

Probabilistic Grammar

A probabilistic context-free grammar (PCFG) is a context-free grammar where each rule has a probability associated with it. In this NLTK book example, a VP has a 40% chance of being composed of 'TV NP', a 30% chance of becoming 'IV', and a 30% chance of becoming 'DatV NP NP'. (TV: transitive verb, IV: intranstive verb, DatV: dative verb) Note that these add up to 100%. It essentially encodes conditional frequency distribution (CFD): given the VP node, how likely is it to have as its children nodes 'TV NP' vs. 'IV' vs. 'DatV NP NP'?A tree generated by such a grammar will have its own probability distribution score, which is used for ambiguity resolution. A PCFG parser computes the probability score of the entire tree, which can then be used as a basis for ranking between multiple tree structures. The Viterbi parser nltk.ViterbiParser() is such a probabilistic parser.

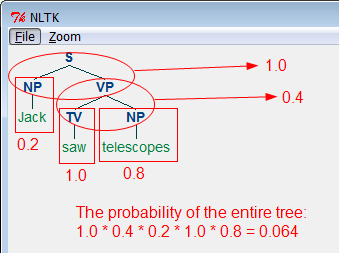

But how does one compute the probability of an entire tree? It is essentially the product of the probabilities of the component trees. That is, it can be obtained by multiplying the individual probability of each CFG rule used in the tree. Therefore, the probability of 0.064 for the sentence "Jack saw telescopes" in this example is derived as follows:

Given this, you should be able to see how a resource such as a syntactically annotated treebank can be useful. The probability estimation of a particular context-free rule can be obtained from the corpus. Interestingly enough, in Penn Treebank 'NP -> NP PP' has a higher likelihood than 'NP -> DT NN'; as a matter of fact, it's the highest-ranking NP rule:

Likewise, below are the top-ranked lexical adjective rules ('JJ -> ...') and their probabilities, again compiled from the Penn Treebank.

NP rule probability NP -> NP PP 0.09222728039116507 NP -> DT NN 0.0851458438711853 NP -> -NONE- 0.05163547462485247 NP -> NN 0.04678806272129489 NP -> NNS 0.041982802225594334

Incorporating such statistical information, CFGs such as grammar1 can be made into a probabilistic context-free grammar. An example:

JJ rule probability JJ -> 'new' 0.027768255056564963 JJ -> 'other' 0.02296880356530682 JJ -> 'last' 0.014741172437435722 JJ -> 'many' 0.014226945491943779 JJ -> 'such' 0.01405553651011313

S -> NP VP [0.7]| AUX NP VP [0.05]| NP AUX VP [0.25]

CP -> COMP S [1.0]

NP -> DET N [0.13]| DET N N [0.05]| DET ADJ N [0.07]| DET ADJ ADJ N [0.03]

NP -> N [0.12]| N N [0.05]| PRO [0.1]

NP -> NP PP [0.3]| NP CP [0.1]| NP CONJ NP [0.05]

ADJP -> ADJ [0.6]| ADJP CONJ ADJP [0.15]| ADV ADJ [0.25]

ADVP -> ADV ADV [1.0]

VP -> V [0.1]| V ADJP [0.1]| V NP [0.19]| V NP CP [0.1]| V NP NP [0.05]| V NP PP [0.01]

VP -> VP PP [0.25]| VP PP PP [0.05]| VP ADVP [0.1]| VP CONJ VP [0.05]

PP -> P NP [1.0]

ADJ -> 'big' [0.2]| 'happy' [0.2]| 'nerdy' [0.05]| 'old' [0.35]| 'poor' [0.15]| 'tiny' [0.05]

ADV -> 'much' [0.4]| 'very' [0.6]

AUX -> 'had' [0.4]| 'must' [0.2]| 'will' [0.4]

COMP -> 'that' [1.0]

CONJ -> 'and' [0.7]| 'but' [0.3]

DET -> 'a' [0.25]| 'her' [0.1]| 'his' [0.1]| 'my' [0.1]| 'the' [0.35]| 'their' [0.1]

N -> 'Homer' [0.04]| 'Lisa' [0.03]| 'Marge' [0.03]| 'Tuesday' [0.01]| 't' [0.02]

N -> 'bar' [0.03]| 'book' [0.05]| 'brother' [0.05]| 'bully' [0.05]| 'butter' [0.04]

N -> 'cat' [0.1]| 'children' [0.05]| 'donut' [0.03]| 'friends' [0.1]| 'ham' [0.02]

N -> 'kid' [0.03]| 'park' [0.03]| 'peanut' [0.02]| 'sandwich' [0.02]

N -> 'school' [0.1]| 'sister' [0.05]| 'table' [0.04]| 'work' [0.06]

P -> 'after' [0.1]| 'from' [0.15]| 'in' [0.25]| 'on' [0.15]| 'to' [0.25]| 'with' [0.1]

PRO -> 'I' [0.3]| 'he' [0.25]| 'him' [0.2]| 'she' [0.25]

V -> 'are' [0.2]| 'ate' [0.1]| 'died' [0.05]| 'drank' [0.06]| 'gave' [0.05]| 'given' [0.05]

V -> 'liked' [0.1]| 'make' [0.1]| 'play' [0.1]| 'punched' [0.04]| 'sang' [0.05]| 'told' [0.1]

Thusly, a probabilistic parser based on a PCFG computes the probability of each parse tree using the probabilities of the individual CF rules used in the tree. Syntactic ambiguities, then, can be resolved in favor of a tree among the list of possible parses that has the highest probability. Our favorite Chart Parser comes with a few probabilistic variants, and "Inside Chart Parser" below is built on the PCFG above. It successfully parses s3 "Homer ate the donut on the table" and outputs a list of parses sorted in the order of probability. The top ranking parse is where 'on the table' modifies 'the donut', which matches our intuition.

|